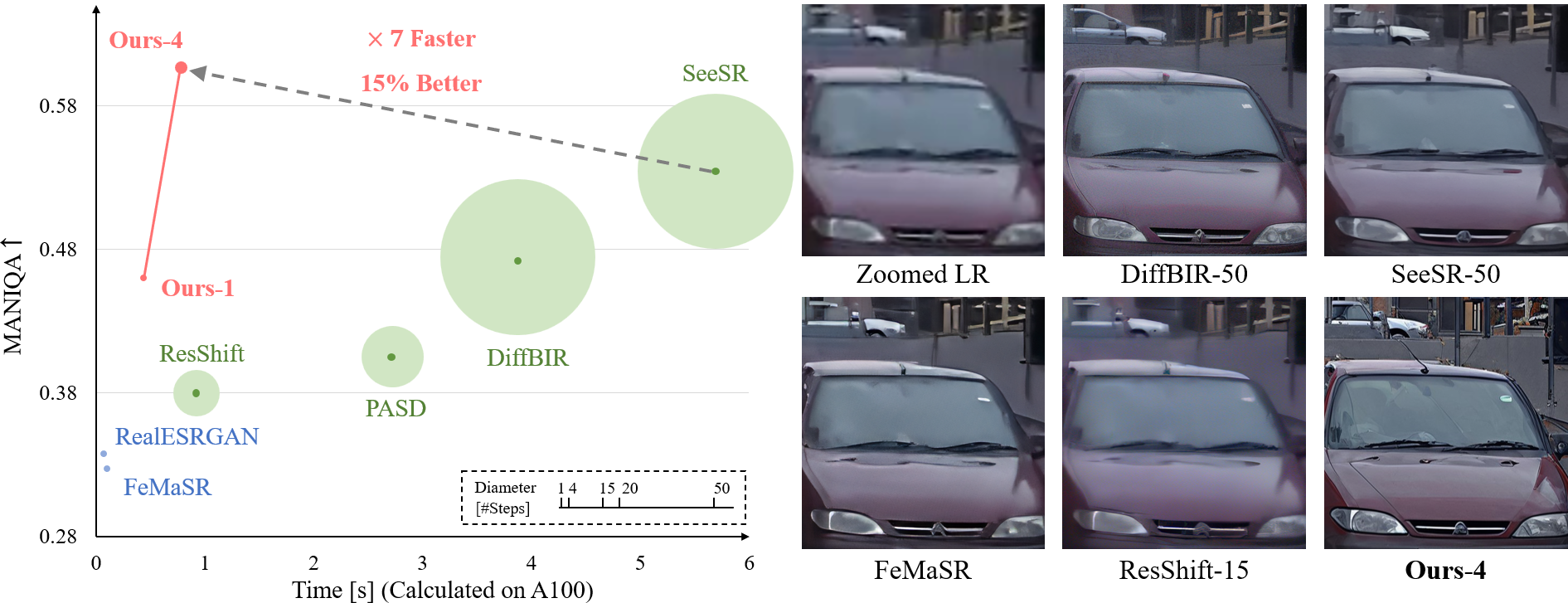

TL;DR: AddSR achieves better perceptual quality and faster inference than previous SD-based super-resolution models in only 4 steps by incorporating an efficient text-to-image distillation technique (adversarial diffusion distillation) and ControlNet.

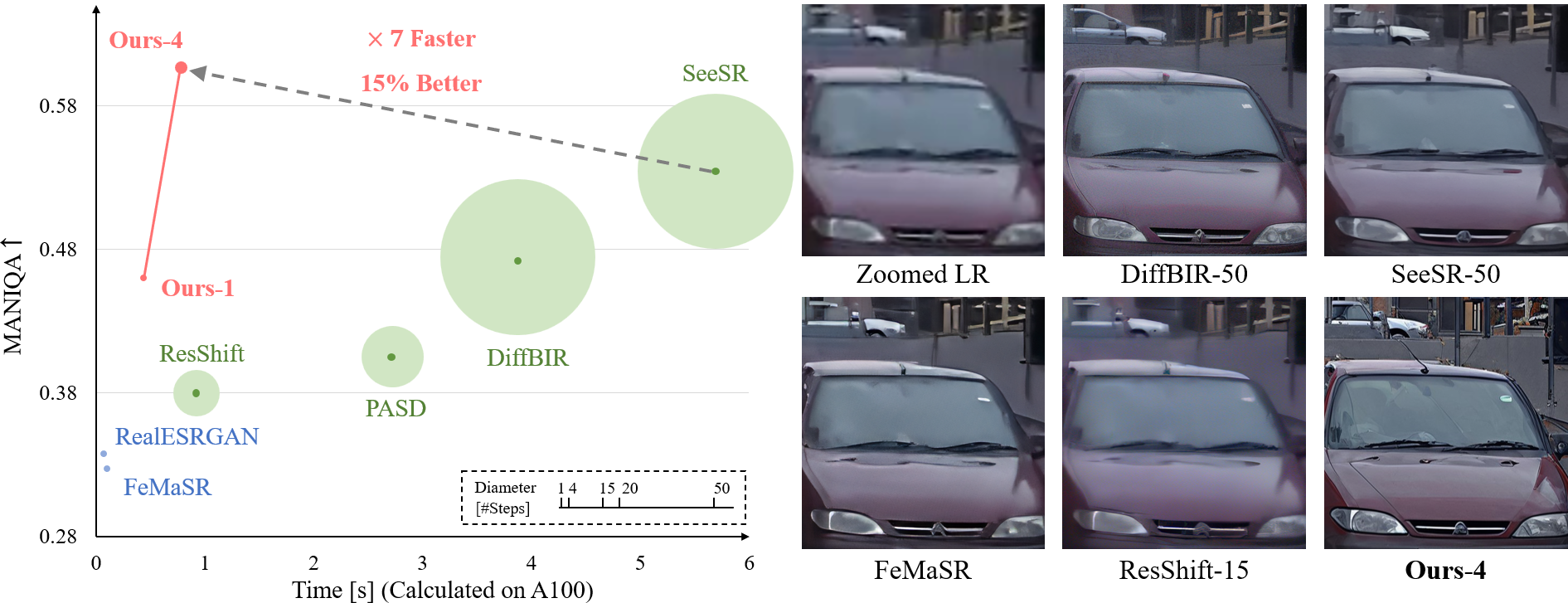

Blind super-resolution methods based on Stable Diffusion show strong generative capability in reconstructing clear high-resolution (HR) images with intricate details from low-resolution (LR) inputs. However, their practical applicability is often limited by poor efficiency, because they require hundreds or even thousands of sampling steps. Inspired by adversarial diffusion distillation (ADD), an efficient text-to-image approach, we design AddSR to address this issue by combining the ideas of distillation and ControlNet. Specifically, we first propose a prediction-based self-refinement strategy that supplies high-frequency information to the student model's output at marginal additional time cost. Furthermore, we refine the training process by using HR images, rather than LR images, to regulate the teacher model, which provides a more robust constraint for distillation. Second, we introduce a timestep-adapting loss to address the perception-distortion imbalance introduced by ADD. Extensive experiments demonstrate that AddSR generates better restoration results while running faster than previous SD-based state-of-the-art models (e.g., 7× faster than SeeSR).
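To make the prediction-based self-refinement idea more concrete, here is a minimal, hypothetical Python sketch of a 4-step sampling loop. It assumes (our reading, not something stated above) that the first step conditions the student on the LR image, and that every later step conditions it on the HR estimate predicted at the previous step. The `psr_sample` and `toy_student` names, the `alpha_bar` schedule, the timesteps `[999, 749, 499, 249]`, and the list-of-floats image representation are illustrative stand-ins, not AddSR's actual code.

```python
import math
import random
from typing import Callable, List

# Toy stand-in for an image or latent: a flat list of pixel values.
Image = List[float]

# Hypothetical student signature: (noisy input x_t, timestep t, condition) -> x0 estimate.
Student = Callable[[Image, int, Image], Image]


def psr_sample(
    lr: Image,
    student: Student,
    timesteps: List[int],
    alpha_bar: Callable[[int], float],
    seed: int = 0,
) -> Image:
    """Few-step sampling with prediction-based self-refinement (illustrative sketch).

    Assumption: step 0 is conditioned on the LR input; every later step is
    conditioned on the x0 prediction produced by the previous step.
    """
    rng = random.Random(seed)
    cond = list(lr)  # first condition: the LR image
    x0_hat: Image = []
    for i, t in enumerate(timesteps):
        eps = [rng.gauss(0.0, 1.0) for _ in lr]
        if i == 0:
            x_t = eps  # start from pure noise
        else:
            # Re-noise the current estimate to level t, as in few-step ADD-style sampling.
            a = alpha_bar(t)
            x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0_hat, eps)]
        x0_hat = student(x_t, t, cond)
        cond = x0_hat  # self-refinement: the next step sees the sharper estimate
    return x0_hat


def toy_student(x_t: Image, t: int, cond: Image) -> Image:
    # Hypothetical placeholder model: moves the condition halfway toward 1.0.
    return [c + 0.5 * (1.0 - c) for c in cond]


if __name__ == "__main__":
    hr = psr_sample([0.0, 0.2], toy_student, [999, 749, 499, 249], lambda t: 1.0 - t / 1000.0)
    print(hr)
```

In this sketch the refinement adds no extra model calls: the condition for step i+1 is simply the output of step i, which is one way a strategy like this could keep its additional time cost small.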

Left: LR Image. Right: Restored Image.

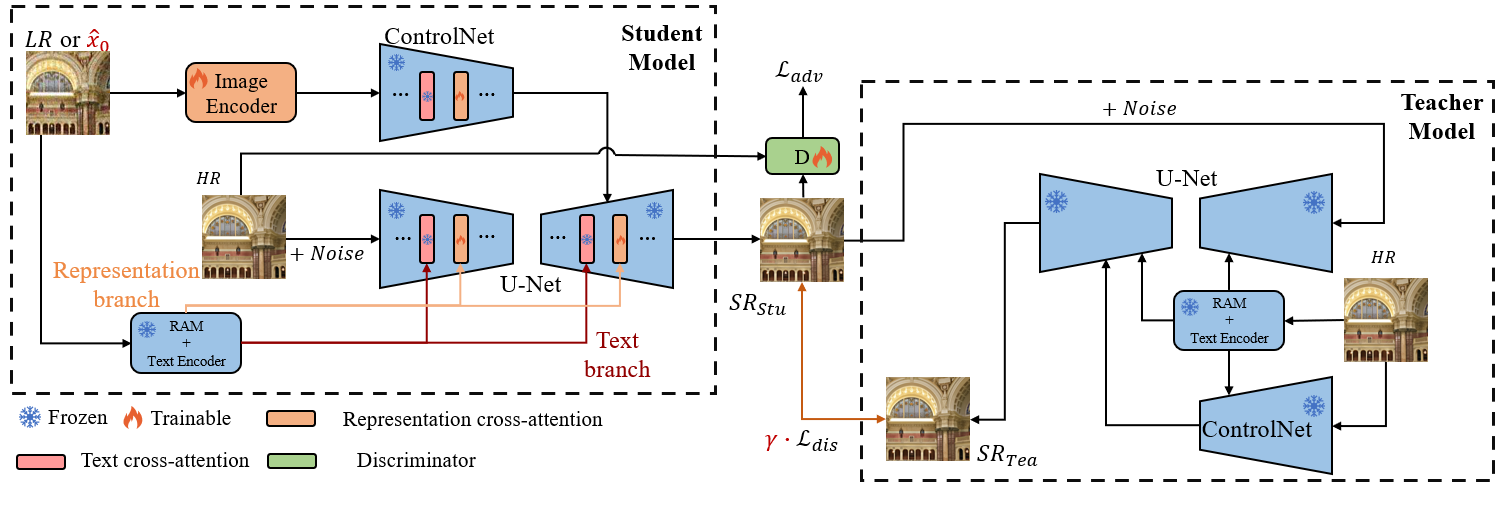

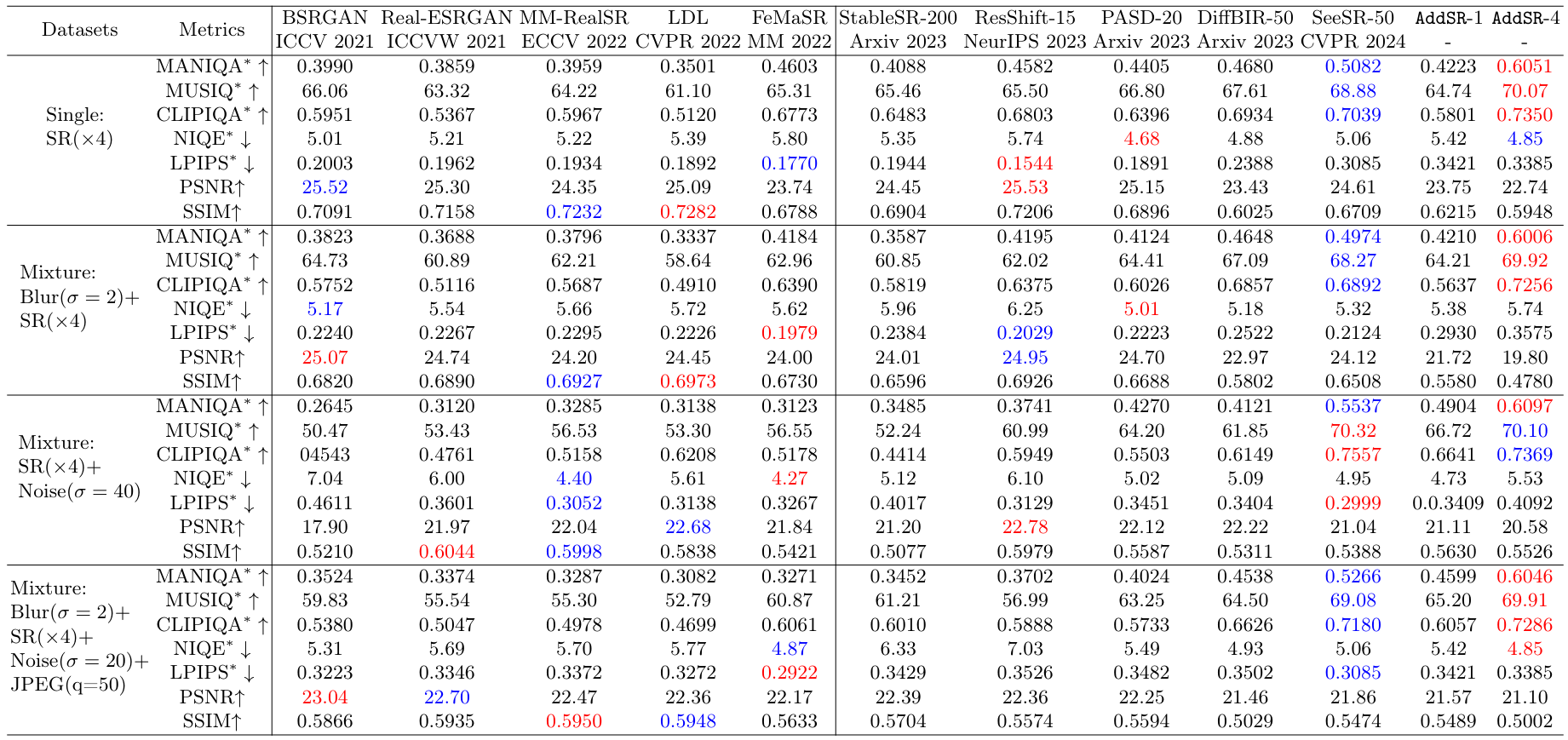

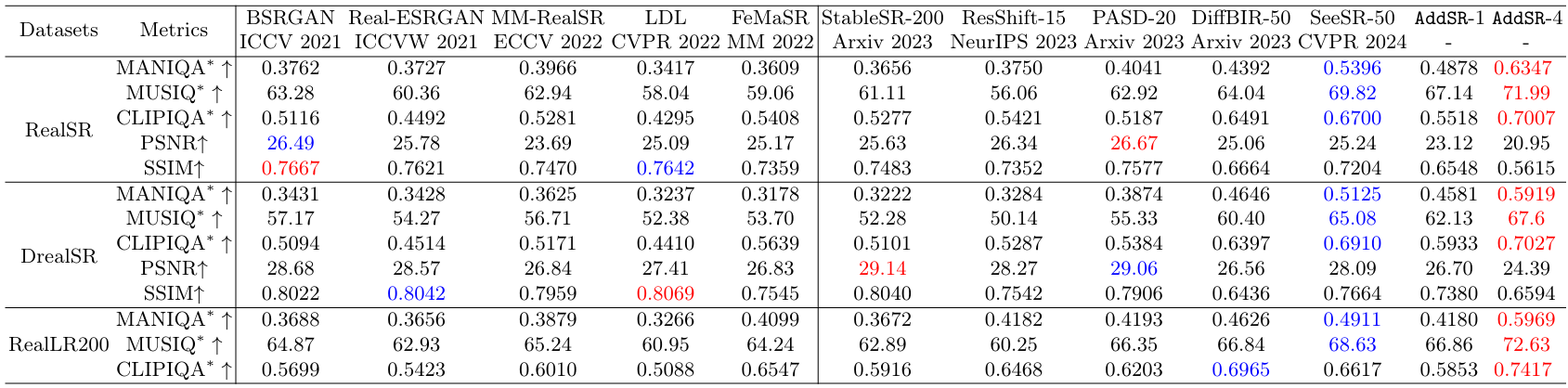

Results on Synthetic LR Images

Results on Real-World LR Images

RAM Prompt vs. Manual Prompt

SeeSR-Turbo-2 vs. AddSR-2

Acknowledgements: The website template was borrowed from Youtian Lin.